Mistral Large

Overview

Mistral AI has released Mistral Large, its most advanced large language model (LLM), with strong multilingual, reasoning, maths, and code generation capabilities. Mistral Large is available through Mistral AI's own platform, la Plateforme, and through Microsoft Azure. It can also be tested in le Chat, Mistral AI's new chat app.

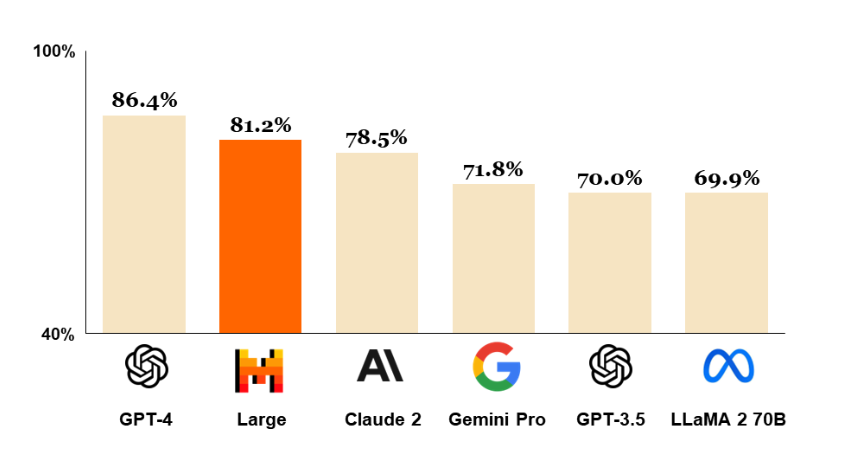

The chart above compares Mistral Large with other powerful LLMs such as GPT-4 and Gemini Pro. On the MMLU benchmark, Mistral Large scores 81.2%, ranking second only to GPT-4 among the models compared.

Key Capabilities

Mistral Large's capabilities and strengths include:

- 32K-token context window - Enables precise information recall from large documents

- Native multilingual capacities - Fluent in English, French, Spanish, German, and Italian

- Strong reasoning capabilities - Advanced logical thinking and problem-solving

- Mathematical proficiency - Excellent performance on math benchmarks

- Code generation - Strong coding and programming capabilities

- Function calling - Native support for function calling and JSON format

- Precise instruction following - Enables developers to design their own moderation policies

- Low-latency option - Mistral Small model for optimized performance
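To make function calling and JSON output more concrete, here is a minimal sketch that only builds request bodies and sends nothing over the network. It assumes the field names `model`, `messages`, `tools`, `tool_choice`, and `response_format`, and the model identifier `mistral-large-latest`, follow Mistral's chat completions API; verify them against the official API reference. The `get_payment_status` tool is hypothetical.

```python
import json

# Assumption: field names mirror Mistral's chat completions API; verify before use.
MODEL = "mistral-large-latest"  # assumed model identifier for Mistral Large


def build_tool_request(user_message: str) -> dict:
    """Build a chat request body that offers the model one callable tool."""
    # Hypothetical tool, for illustration only.
    get_payment_status = {
        "type": "function",
        "function": {
            "name": "get_payment_status",
            "description": "Get the status of a payment by its transaction ID.",
            # JSON Schema describing the arguments the model may produce.
            "parameters": {
                "type": "object",
                "properties": {
                    "transaction_id": {
                        "type": "string",
                        "description": "The transaction ID.",
                    }
                },
                "required": ["transaction_id"],
            },
        },
    }
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
        "tools": [get_payment_status],
        "tool_choice": "auto",  # let the model decide whether to call the tool
    }


def build_json_request(user_message: str) -> dict:
    """Build a chat request body that asks for a JSON object as the reply."""
    return {
        "model": MODEL,
        "messages": [{"role": "user", "content": user_message}],
        "response_format": {"type": "json_object"},  # assumed JSON-mode switch
    }


if __name__ == "__main__":
    body = build_tool_request("What is the status of transaction T1001?")
    print(json.dumps(body, indent=2))
```

In practice the body would be sent as JSON in a POST request to Mistral's chat completions endpoint together with an API key. The tool's `parameters` field is a JSON Schema, which is how the model learns which arguments it is allowed to return.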

Performance Benchmarks

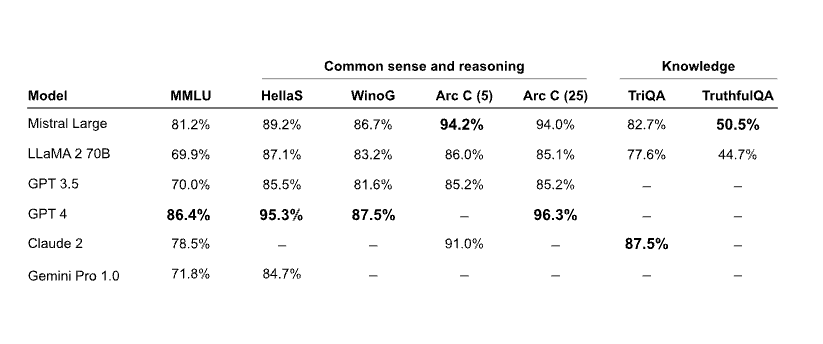

Reasoning and Knowledge

The table below shows how Mistral Large performs on common reasoning and knowledge benchmarks. It trails GPT-4 on most of them but outperforms other LLMs such as Claude 2 and Gemini Pro 1.0.

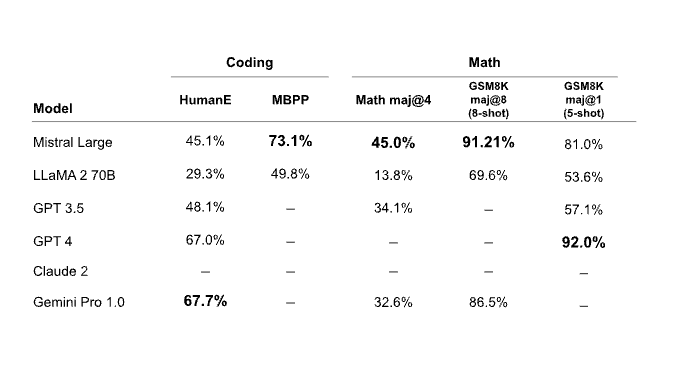

Mathematics & Code Generation

The table below shows how Mistral Large performs on common maths and coding benchmarks. Mistral Large performs strongly on the Math and GSM8K benchmarks, but on coding benchmarks it is significantly outperformed by models such as Gemini Pro and GPT-4.

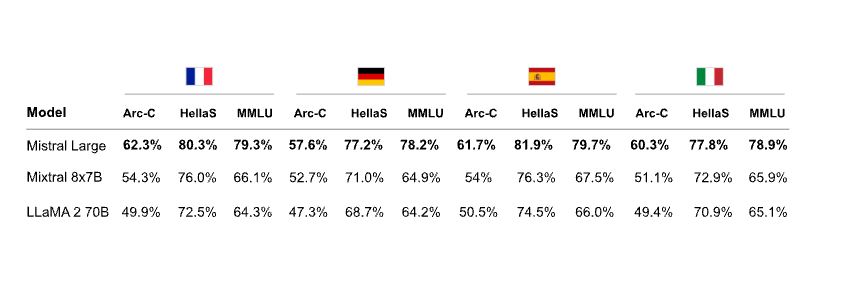

Multilingual Capabilities

The table below shows Mistral Large's performance on multilingual reasoning benchmarks. Mistral Large outperforms Mixtral 8x7B and Llama 2 70B in every language tested: French, German, Spanish, and Italian.

Model Variants

Mistral Small

Alongside Mistral Large, Mistral AI also announced Mistral Small, a smaller model optimized for low-latency workloads that outperforms Mixtral 8x7B. According to Mistral AI, the model has strong capabilities in:

- RAG-enablement - Enhanced retrieval-augmented generation capabilities

- Function calling - Native support for function calling

- JSON format - Built-in JSON formatting support

- Low-latency - Optimized for fast response times
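With function calling, the application (not the model) executes the requested function and sends the result back. The sketch below shows that dispatch step on a hard-coded sample response, so it runs offline. It assumes the response shape (`choices` → `message` → `tool_calls`, each with a `function` holding a `name` and a JSON-encoded `arguments` string) and the `tool` message fields mirror Mistral's chat completions API; check the official reference before relying on them. The `get_payment_status` function and `PAYMENTS` data are hypothetical.

```python
import json

# Assumed response shape, hard-coded so the example runs without an API call.
SAMPLE_RESPONSE = {
    "choices": [
        {
            "message": {
                "role": "assistant",
                "content": "",
                "tool_calls": [
                    {
                        "id": "call_1",
                        "function": {
                            "name": "get_payment_status",
                            "arguments": "{\"transaction_id\": \"T1001\"}",
                        },
                    }
                ],
            }
        }
    ]
}

# Hypothetical local data and function that the model can ask us to run.
PAYMENTS = {"T1001": "Paid", "T1002": "Unpaid"}


def get_payment_status(transaction_id: str) -> str:
    return json.dumps({"status": PAYMENTS.get(transaction_id, "Unknown")})


# Map tool names (as declared to the model) to local Python callables.
TOOLS = {"get_payment_status": get_payment_status}


def run_tool_calls(response: dict) -> list[dict]:
    """Execute each requested tool call and build 'tool' messages for the next turn."""
    message = response["choices"][0]["message"]
    results = []
    for call in message.get("tool_calls") or []:
        name = call["function"]["name"]
        # Arguments arrive as a JSON string, so decode them before calling.
        args = json.loads(call["function"]["arguments"])
        output = TOOLS[name](**args)
        results.append(
            {"role": "tool", "name": name, "content": output, "tool_call_id": call["id"]}
        )
    return results


if __name__ == "__main__":
    for msg in run_tool_calls(SAMPLE_RESPONSE):
        print(msg)
```

The returned `tool` messages would be appended to the conversation and sent back to the model so it can phrase its final answer using the function's result.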

Access and Deployment

Available Platforms

- la Plateforme - Mistral AI's native platform

- Microsoft Azure - Enterprise deployment option

- le Chat - Mistral's new chat application for testing

Endpoints and Model Selection

Mistral AI offers multiple endpoints for different use cases and has published a guide to choosing a model based on performance and cost trade-offs.
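As a rough illustration of the performance and cost trade-off, the hypothetical router below sends reasoning-heavy tasks that can tolerate extra latency to Mistral Large and everything else to the smaller, faster Mistral Small. The `Task` fields, the routing rule, and the model identifiers are assumptions for illustration, not Mistral AI's published guidance.

```python
from dataclasses import dataclass

# Assumed API model identifiers; check the current model list before use.
LARGE = "mistral-large-latest"
SMALL = "mistral-small-latest"


@dataclass
class Task:
    """Hypothetical description of a workload, used only for routing."""
    name: str
    needs_complex_reasoning: bool  # multi-step reasoning, maths, or hard code generation
    latency_sensitive: bool        # interactive or high-volume traffic


def choose_model(task: Task) -> str:
    """Route a task to a model tier (illustrative rule, not official guidance)."""
    # Use the larger model only when the task needs its stronger reasoning
    # and can tolerate the extra latency; otherwise use the smaller model.
    if task.needs_complex_reasoning and not task.latency_sensitive:
        return LARGE
    return SMALL


if __name__ == "__main__":
    tasks = [
        Task("classify support tickets", needs_complex_reasoning=False, latency_sensitive=True),
        Task("solve multi-step math word problems", needs_complex_reasoning=True, latency_sensitive=False),
        Task("live chat with tool calls", needs_complex_reasoning=True, latency_sensitive=True),
    ]
    for task in tasks:
        print(f"{task.name}: {choose_model(task)}")
```

The third task shows the trade-off this rule encodes: a latency-critical workload goes to Mistral Small even if it involves some reasoning. A real router would more likely compare both models on its own evaluation set before fixing such a rule.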

Key Takeaways

- Top-Tier Performance: Ranks second only to GPT-4 on the MMLU benchmark (81.2%)

- Multilingual Excellence: Outperforms Mixtral 8x7B and Llama 2 70B in French, German, Spanish, and Italian

- Reasoning Strength: Strong capabilities in logical reasoning and knowledge tasks

- Mathematical Proficiency: Excellent performance on math benchmarks

- Enterprise Ready: Available on Microsoft Azure as well as on Mistral AI's la Plateforme

- Cost Optimization: Multiple model variants for different performance needs

Related Topics

- Mistral 7B LLM - Mistral's open-source 7B parameter model

- Mixtral 8x7B - Mistral's mixture-of-experts model

- GPT-4 - OpenAI's most advanced language model

- Gemini Pro - Google's advanced language model

- Claude Models - Anthropic's AI assistant models