Active-Prompt

Overview

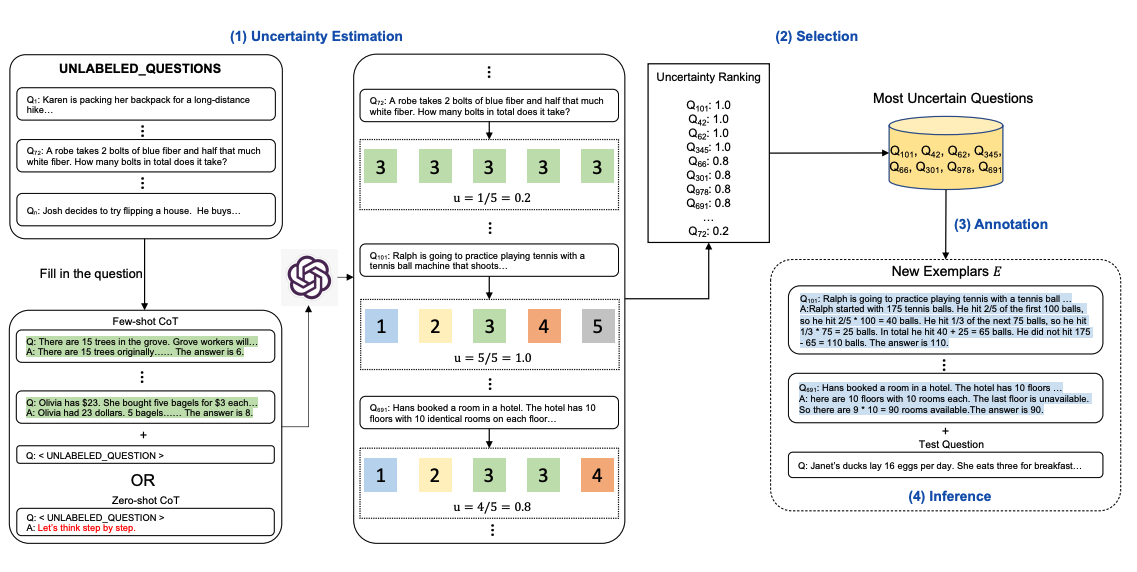

Chain-of-thought (CoT) methods rely on a fixed set of human-annotated exemplars. The problem is that these exemplars might not be the most effective examples for every task. To address this, Diao et al. (2023) proposed a prompting approach called Active-Prompt, which adapts LLMs to different tasks using task-specific example prompts (annotated with human-designed CoT reasoning).

How It Works

The approach has four steps, shown in the illustration from the paper. First, the LLM is queried, with or without a few CoT examples, to generate k possible answers for each question in a set of training questions. Second, an uncertainty metric is calculated for each question from its k answers (disagreement among the answers is used). Third, the most uncertain questions are selected for annotation by humans. Finally, the newly annotated exemplars are used in the prompt to answer each question at inference time.

Image Source: Diao et al. (2023)
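
The uncertainty and selection steps can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: the function names, the `sample_answer` callable (a stand-in for one sampled LLM completion), and the canned answers are all hypothetical, and disagreement is assumed here to be the number of distinct answers divided by k.

```python
from typing import Callable, List, Tuple


def disagreement(answers: List[str]) -> float:
    """Share of distinct answers among the k samples (higher = more uncertain).

    Assumption: disagreement = (number of unique answers) / k.
    """
    if not answers:
        raise ValueError("need at least one sampled answer")
    return len(set(answers)) / len(answers)


def select_most_uncertain(
    questions: List[str],
    sample_answer: Callable[[str], str],  # hypothetical: returns one sampled answer
    k: int = 5,
    n: int = 2,
) -> List[Tuple[str, float]]:
    """Sample k answers per question and return the n most uncertain questions."""
    scored = []
    for question in questions:
        answers = [sample_answer(question) for _ in range(k)]
        scored.append((question, disagreement(answers)))
    # sorted() is stable, so tied questions keep their original order.
    return sorted(scored, key=lambda item: item[1], reverse=True)[:n]


def build_prompt(exemplars: List[Tuple[str, str]], question: str) -> str:
    """Prepend human-annotated (question, rationale and answer) pairs to a new question."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in exemplars)
    return f"{shots}\n\nQ: {question}\nA:"


# Stand-in for an LLM: canned samples per question, replayed in order.
canned = {
    "2 + 2 = ?": ["4", "4", "4", "4", "4"],
    "17 * 23 = ?": ["391", "381", "391", "401", "371"],
    "Is 91 prime?": ["yes", "no", "no", "yes", "no"],
}
calls = {q: 0 for q in canned}


def fake_sample(question: str) -> str:
    i = calls[question]
    calls[question] += 1
    return canned[question][i % len(canned[question])]


selected = select_most_uncertain(list(canned), fake_sample, k=5, n=2)
print(selected)
```

With these canned samples, the multiplication question (four distinct answers out of five) ranks first and the primality question (two out of five) second. In a real setup, a person would then write CoT rationales for the selected questions, and `build_prompt` would place those annotated pairs in front of each new question.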

Key Benefits

- Adaptive Exemplars: Chooses exemplars per task instead of reusing one fixed set

- Uncertainty-Based Selection: Uses disagreement metrics to identify challenging cases

- Human-in-the-Loop: Incorporates human expertise by having annotators write CoT reasoning for the selected questions

- Task-Specific Optimization: Tailors prompts to individual task requirements

Applications

- Complex reasoning tasks

- Mathematical problem solving

- Multi-step logical reasoning

- Task-specific prompt optimization

Related Topics

- Chain-of-Thought Prompting - Understanding CoT prompting techniques

- Few-Shot Prompting - Learning from examples

- Prompt Engineering Guide - General prompt engineering techniques

References

- Diao et al. (2023) - Active-Prompt: Active Prompting with Chain-of-Thought for Large Language Models