Phi-2

Overview

In this guide, we provide an overview of Phi-2, a 2.7 billion parameter language model, covering its capabilities and how to prompt it. The guide also includes tips, applications, limitations, important references, and additional reading materials related to Phi-2.

Phi-2 Introduction

Phi-2 is the latest small language model (SLM) released by Microsoft Research. It follows the earlier Phi-1 and Phi-1.5 models.

Model Evolution

Phi-1

- Parameters: 1.3 billion parameters

- Training Data: "Textbook quality" data from the web (6B tokens) + synthetically generated textbooks and exercises with GPT-3.5 (1B tokens)

- Performance: Excels at Python code generation tasks

- Reference: (Gunasekar et al. 2023)

Phi-1.5

- Focus: Common sense reasoning and language understanding capabilities

- Capabilities: Complex reasoning tasks such as grade-school mathematics and basic coding tasks

- Performance: Comparable to models 5 times larger

Phi-2

- Parameters: 2.7 billion parameters

- Improvements: Enhanced reasoning and language understanding capabilities

- Performance: Outperforms models up to 25x larger

- License: MIT License (usable in commercial settings)

Phi-2 Insights & Evaluation

Research Focus

LLM researchers are keen to explore whether small language models show emergent capabilities similar to those of their larger counterparts, and whether there are training techniques that can help achieve this.

Training Details

- Data: "Textbook-quality" data (1.4 trillion tokens with multiple passes)

- Synthetic Datasets: Help teach common sense reasoning and general knowledge

- Augmentation: Educational and high-quality web content

- Training Time: 14 days on 96 A100 GPUs

- Fine-tuning: No additional RLHF or instruction tuning applied
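To put the training figures above in perspective, the short calculation below derives the total GPU time and the number of training tokens seen per parameter. It is a back-of-the-envelope sketch that assumes all 96 GPUs were in use for the full 14 days, which the source does not state explicitly, so treat the result as an upper bound.

```python
# Figures taken from the training details listed above.
TRAINING_DAYS = 14
NUM_GPUS = 96
TOKENS_SEEN = 1.4e12   # 1.4 trillion tokens, counting repeated passes
PARAMETERS = 2.7e9     # Phi-2 parameter count

# Assumption: every GPU was busy for the whole run.
gpu_days = TRAINING_DAYS * NUM_GPUS
gpu_hours = gpu_days * 24
tokens_per_parameter = TOKENS_SEEN / PARAMETERS

print(f"GPU-days: {gpu_days}")      # 1344
print(f"GPU-hours: {gpu_hours}")    # 32256
print(f"Tokens per parameter: {tokens_per_parameter:.0f}")  # about 519
```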

Knowledge Transfer

Phi-1.5 knowledge is transferred to Phi-2, which helps in:

- Model convergence

- Performance boost across several benchmarks

Performance Comparison

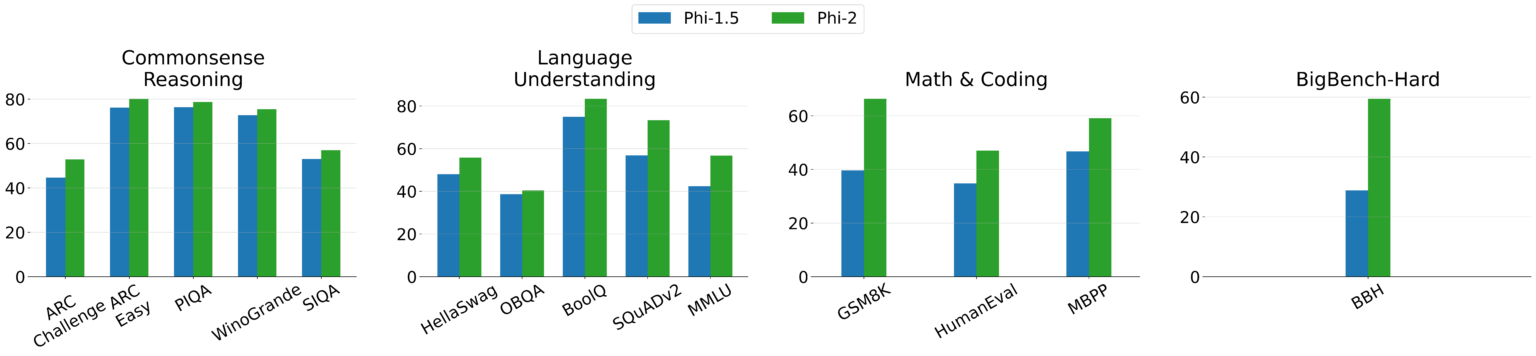

The figure above compares the performance of Phi-2 (2.7B) and Phi-1.5 (1.3B) on:

- Common sense reasoning

- Math reasoning

- Code generation

- Other language understanding benchmarks

Note: All tasks are evaluated zero-shot, except BBH (3-shot chain-of-thought) and MMLU (5-shot).
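The difference between 0-shot and few-shot evaluation comes down to how many worked examples are placed before the question. The sketch below illustrates the idea only: the `Question:`/`Answer:` layout and the function name `build_k_shot_prompt` are hypothetical and are not the prompt format used in the authors' evaluation, which the source does not specify. For a chain-of-thought setup, the example answers would contain the reasoning steps as well as the final answer.

```python
from typing import List, Tuple


def build_k_shot_prompt(examples: List[Tuple[str, str]], question: str, k: int) -> str:
    """Prepend the first k worked examples to the question (k=0 is zero-shot)."""
    if k < 0 or k > len(examples):
        raise ValueError("k must be between 0 and len(examples)")
    # Hypothetical layout: one "Question/Answer" block per worked example.
    blocks = [f"Question: {q}\nAnswer: {a}" for q, a in examples[:k]]
    # The final block leaves the answer open for the model to complete.
    blocks.append(f"Question: {question}\nAnswer:")
    return "\n\n".join(blocks)


demo_examples = [
    ("What is 2 + 3?", "5"),
    ("What is 4 + 4?", "8"),
    ("What is 7 + 1?", "8"),
]
zero_shot = build_k_shot_prompt(demo_examples, "What is 6 + 2?", k=0)
three_shot = build_k_shot_prompt(demo_examples, "What is 6 + 2?", k=3)
print(three_shot)
```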

Safety Performance

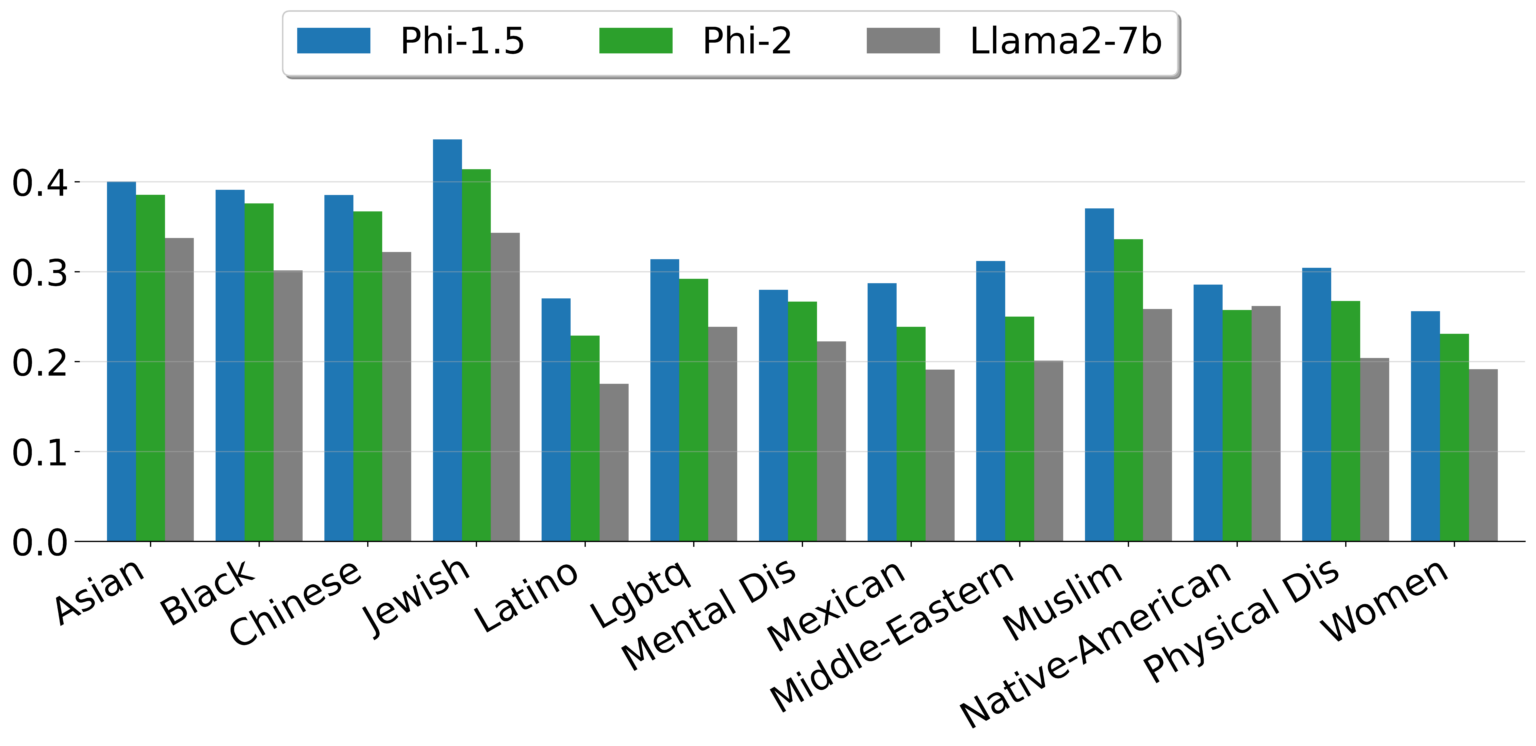

Safety Comparison

While the model wasn't aligned with special techniques like RLHF, it's reported to be safer in terms of toxicity and bias compared to the aligned open-source Llama2-7b. The authors attribute this to data curation efforts.

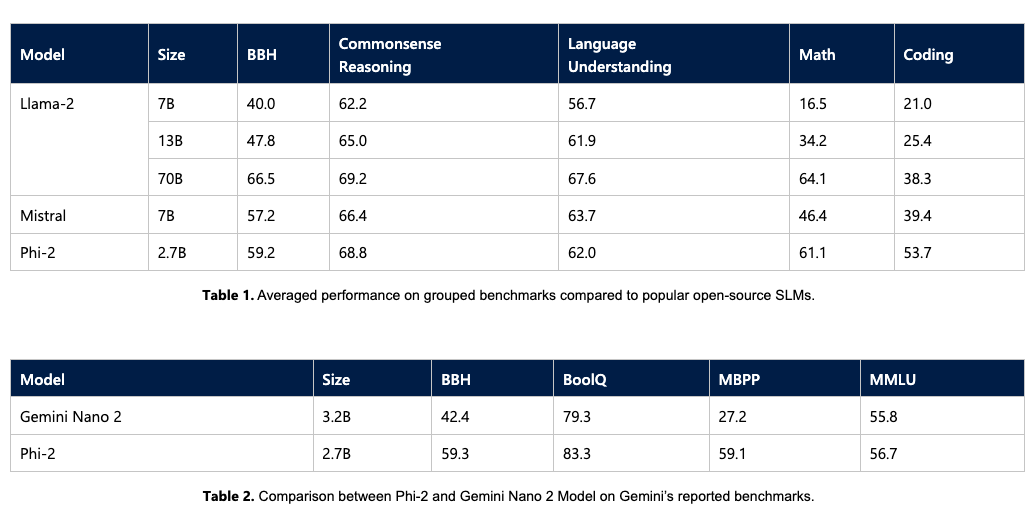

Benchmark Performance

As shown in the accompanying benchmark figure, Phi-2 outperforms:

- Mistral 7B

- Llama 2 (13B)

- Llama-2-70B on multi-step reasoning

- Google's Gemini Nano 2
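For a sense of scale, the snippet below computes how much larger each of the named models is than Phi-2, using the nominal parameter counts that appear in the model names above. Gemini Nano 2 is left out because its size is not given in this guide.

```python
PHI2_PARAMS_B = 2.7  # Phi-2 size in billions of parameters

# Nominal sizes (in billions) as they appear in the model names above.
compared_models = {
    "Mistral 7B": 7.0,
    "Llama 2 (13B)": 13.0,
    "Llama-2-70B": 70.0,
}

size_ratios = {name: size / PHI2_PARAMS_B for name, size in compared_models.items()}

for name, ratio in size_ratios.items():
    print(f"{name}: {ratio:.1f}x the size of Phi-2")
```

The largest ratio, for Llama-2-70B, comes out at roughly 26x.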

Capability Examples

Below, we provide several prompt examples that demonstrate the capabilities of the Phi-2 model on various tasks.

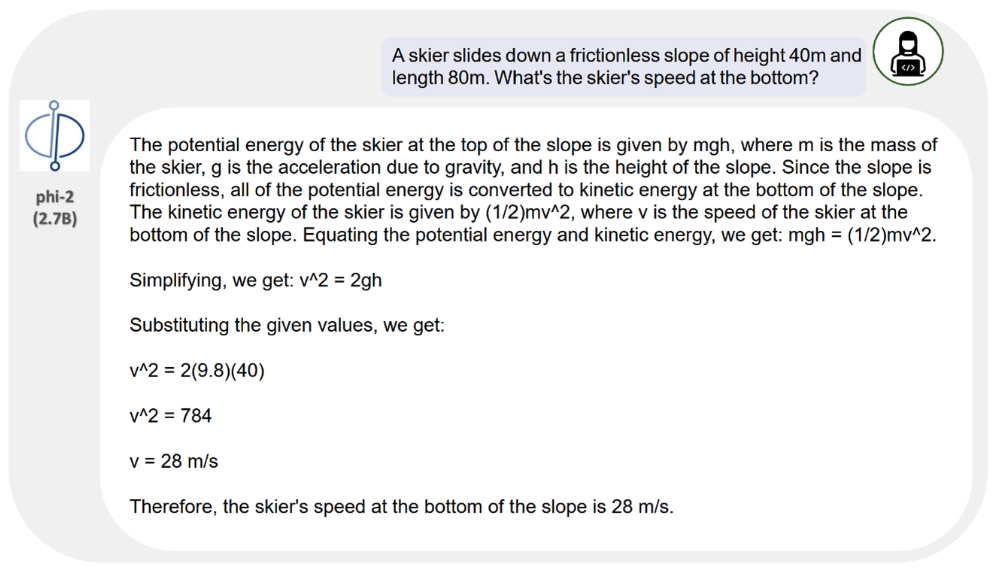

Physics Problem Solving

The example below demonstrates the ability of Phi-2 to solve physics word problems:

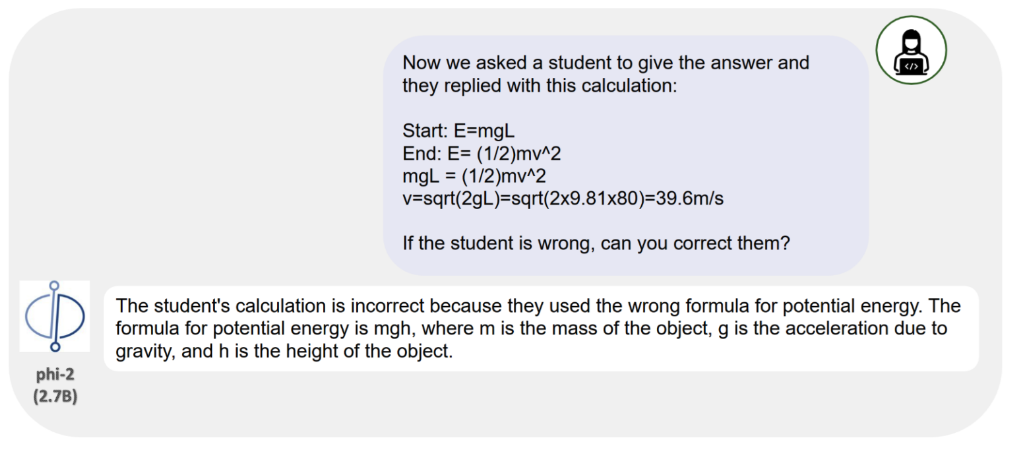

Error Identification

The example below demonstrates the ability of Phi-2 to identify mistakes in a student's calculation for a physics word problem:

Phi-2 Usage

According to the model page, Phi-2 can be prompted using three formats:

- QA format

- Chat format

- Code format

Below we demonstrate how to effectively use these prompt templates in different scenarios.

QA Format

Use case: Scenarios where you ask the model a question and want a concise answer in return.

Prompt Template:

Instruct: {{prompt}}

Output:

Example

Prompt:

Instruct: What is the difference between data and information?

Output:

Output:

Great question! Data refers to raw facts, statistics, or numbers that have not been analyzed or processed into any meaningful context. On the other hand, information is data that has been organized, analyzed, and interpreted in a way that it can be used by humans to make informed decisions or take actions. In other words, data is raw material, while information is processed and useful knowledge.
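The QA template above can be filled in with a small helper. This is a minimal sketch: the function name `format_qa_prompt` is our own, and it assumes the template is a single `Instruct:` line followed by an open `Output:` line for the model to complete.

```python
def format_qa_prompt(question: str) -> str:
    """Fill the QA template: an 'Instruct:' line followed by an open 'Output:' line."""
    return f"Instruct: {question.strip()}\nOutput:"


qa_prompt = format_qa_prompt("What is the difference between data and information?")
print(qa_prompt)
```

The resulting string is what would be sent to the model as the prompt. The model's continuation after `Output:` is the answer.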

Chat Format

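The chat format used by Phi-2 is a plain turn-by-turn transcript: each turn starts with a speaker label (Human: and AI: in the example here), and the prompt ends with an open AI: label for the model to complete. Here's a simple example: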

Prompt:

Human: Hello, who are you?

AI: Greetings! I am an AI research assistant. How can I help you today?

Human: Can you tell me about the creation of black holes?

AI:

Output:

Black holes form when a massive star runs out of fuel and collapses under its own gravitational pull. The resulting object is so dense that it distorts space-time around it, causing anything that comes too close to be pulled in by the immense gravity. This includes light, which cannot...
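A chat prompt like the one above can be assembled from a list of turns. The sketch below is an illustration only: the helper name `format_chat_prompt` is hypothetical, and the `Human`/`AI` speaker labels are simply the ones used in the example above.

```python
from typing import List, Tuple


def format_chat_prompt(turns: List[Tuple[str, str]], next_speaker: str = "AI") -> str:
    """Render (speaker, text) turns and end with an open label for the next speaker."""
    lines = [f"{speaker}: {text.strip()}" for speaker, text in turns]
    # The trailing label signals that the model should write the next turn.
    lines.append(f"{next_speaker}:")
    return "\n".join(lines)


conversation = [
    ("Human", "Hello, who are you?"),
    ("AI", "Greetings! I am an AI research assistant. How can I help you today?"),
    ("Human", "Can you tell me about the creation of black holes?"),
]
chat_prompt = format_chat_prompt(conversation)
print(chat_prompt)
```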

Code Format

Below is a code generation prompt template that provides the name of the function to the model:

Prompt:

def multiply(a,b):

Important Note: The model has been trained on a limited set of Python code examples and libraries, so its code generation capabilities and language support are quite limited.
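A code-format prompt is just the start of a function definition that the model is expected to continue. The sketch below builds such a prompt and, given the limited code generation noted above, adds a simple syntax check for whatever the model returns. Both helper names are hypothetical. The optional docstring is our own addition for giving the model more context and is not part of the template shown above.

```python
import ast
from typing import Sequence


def format_code_prompt(name: str, params: Sequence[str], description: str = "") -> str:
    """Build a function header, optionally followed by a docstring, for the model to complete."""
    signature = f"def {name}({', '.join(params)}):"
    if not description:
        return signature + "\n"
    return f'{signature}\n    """\n    {description}\n    """\n'


def is_valid_python(source: str) -> bool:
    """Return True if the text parses as Python. This checks syntax only, not correctness."""
    try:
        ast.parse(source)
    except SyntaxError:
        return False
    return True


code_prompt = format_code_prompt("multiply", ["a", "b"])
print(code_prompt)

# Stand-in for a model completion, written by hand for illustration.
example_completion = code_prompt + "    return a * b\n"
print(is_valid_python(example_completion))
```

A syntax check like this only catches malformed output. Generated code still needs tests before it is used.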

Phi-2 Limitations

Below is a summary of limitations of Phi-2, as reported by the authors:

Content Generation

- Inaccuracy: Similar to other models, Phi-2 may generate inaccurate code and statements

- Instruction Following: Phi-2 is not instruction-tuned, so it may struggle to follow instructions

- Verbosity: Tends to generate verbose responses, sometimes producing irrelevant extra text

Language and Comprehension

- Language Support: Training data is mostly standard English, so the model may struggle with slang and fail to comprehend instructions in other languages

- Training Data: Primarily textbooks, which may contribute to verbose responses

Safety and Bias

- Societal Biases: May reflect societal biases and produce toxic content

- Safety: While improved over previous versions, still has limitations

Note: The authors suggest that verbosity is probably due to the nature of the training dataset, which is primarily textbooks.
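One common way to cope with verbose completions is to cut the generated text at the first sign that the model has started a new turn or a new question. The sketch below shows this post-processing step. It is a workaround of our own, not something recommended by the authors, and the default stop markers are assumptions based on the QA and chat labels used in this guide.

```python
from typing import Sequence


def trim_completion(
    text: str,
    stop_markers: Sequence[str] = ("\nInstruct:", "\nHuman:", "\nAI:"),
) -> str:
    """Keep only the text before the earliest stop marker, with surrounding whitespace removed."""
    cut = len(text)
    for marker in stop_markers:
        index = text.find(marker)
        if index != -1:
            cut = min(cut, index)
    return text[:cut].strip()


# Hand-written stand-in for a completion that runs on past the answer.
raw_completion = " Data is raw facts.\nInstruct: What is a database?\nOutput: A store of data."
trimmed = trim_completion(raw_completion)
print(trimmed)
```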

Figure Sources: Microsoft Research

Key Takeaways

- Small but Powerful: 2.7B parameters outperforming models up to 25x larger

- Textbook Quality Training: 1.4T tokens of high-quality educational content

- Strong Reasoning: Excels at common sense reasoning and mathematics

- Safety Improvements: Reported to be safer in terms of toxicity and bias than the aligned Llama2-7b

- Multiple Formats: Supports QA, chat, and code generation

- Commercial Ready: MIT License for commercial use

- Limitations: Limited code generation, instruction following challenges, potential verbosity