LLaMA: Open and Efficient Foundation Language Models

Overview

Note: This section is under heavy development.

What's New?

This paper introduces a collection of foundation language models ranging from 7B to 65B parameters.

Training Data

The models are trained on trillions of tokens with publicly available datasets.

Research Context

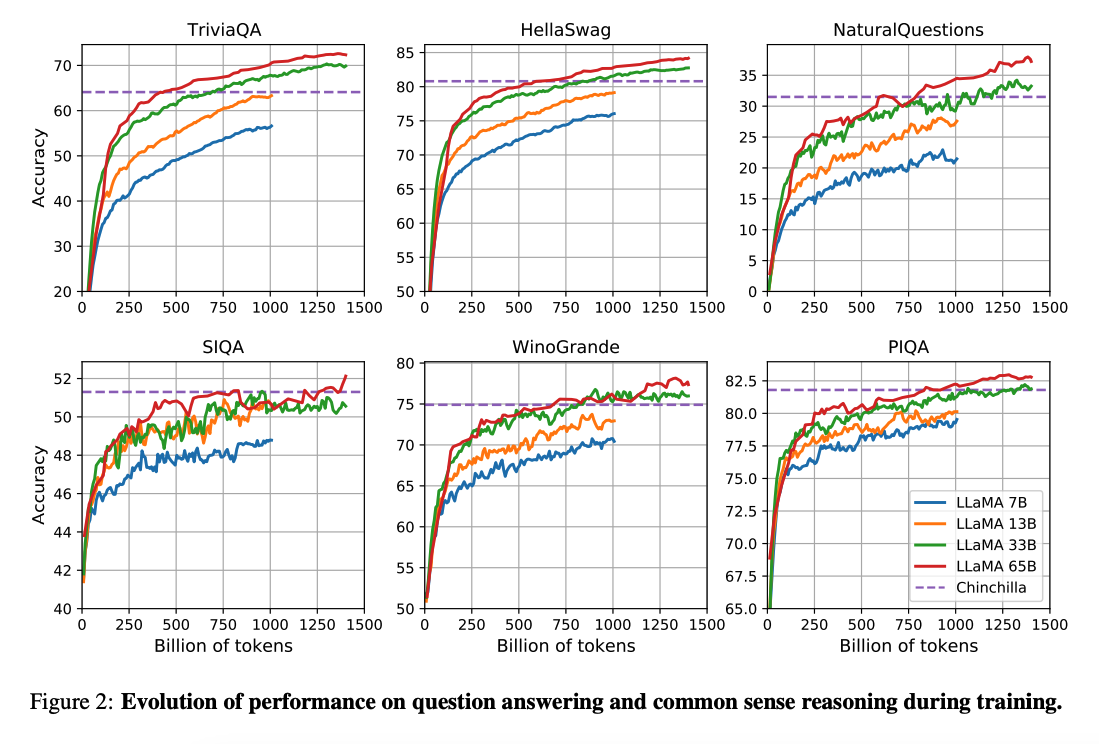

The work by (Hoffman et al. 2022) shows that given a compute budget, smaller models trained on a lot more data can achieve better performance than larger counterparts. This work recommends training 10B models on 200B tokens.

Key Finding: However, the LLaMA paper finds that the performance of a 7B model continues to improve even after 1T tokens.

Research Focus

This work focuses on training models (LLaMA) that achieve the best possible performance at various inference budgets, by training on more tokens.

Capabilities & Key Results

Performance Comparison

LLaMA-13B outperforms GPT-3(175B) on many benchmarks despite being:

- 10x smaller

- Possible to run on a single GPU

LLaMA 65B is competitive with models like:

- Chinchilla-70B

- PaLM-540B

Resources

Paper

LLaMA: Open and Efficient Foundation Language Models

Code

References

April 2023

- Koala: A Dialogue Model for Academic Research

- Baize: An Open-Source Chat Model with Parameter-Efficient Tuning on Self-Chat Data

March 2023

- Vicuna: An Open-Source Chatbot Impressing GPT-4 with 90%* ChatGPT Quality

- LLaMA-Adapter: Efficient Fine-tuning of Language Models with Zero-init Attention

- GPT4All

- ChatDoctor: A Medical Chat Model Fine-tuned on LLaMA Model using Medical Domain Knowledge

- Stanford Alpaca

Key Takeaways

- Efficient Scaling: Smaller models trained on more data can outperform larger counterparts

- Continuous Improvement: 7B model performance improves even after 1T tokens

- Cost-Effective: LLaMA-13B outperforms GPT-3(175B) while being 10x smaller

- Single GPU Compatible: LLaMA-13B can run on a single GPU

- Competitive Performance: LLaMA 65B competes with state-of-the-art models

- Open Foundation: Provides base for many derivative models and applications