Directional Stimulus Prompting

Overview

Li et al., (2023) proposes a new prompting technique to better guide the LLM in generating the desired summary.

A tuneable policy LM is trained to generate the stimulus/hint. Seeing more use of RL to optimize LLMs.

How It Works

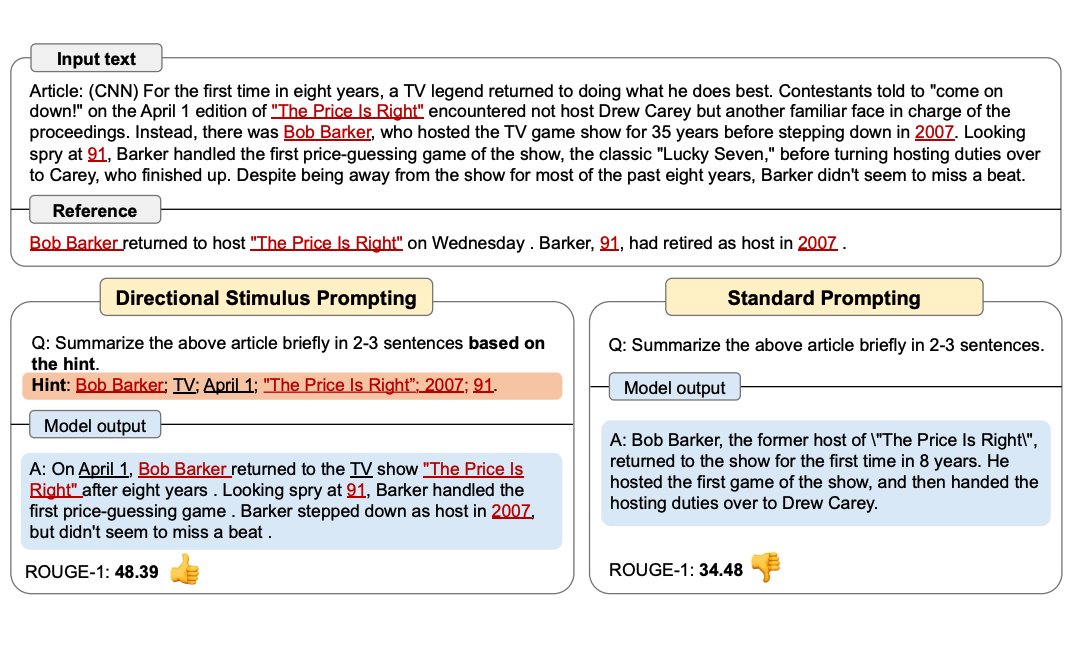

The figure below shows how Directional Stimulus Prompting compares with standard prompting. The policy LM can be small and optimized to generate the hints that guide a black-box frozen LLM.

Image Source: Li et al., (2023)

Key Components

- Policy LM: A small, trainable language model that generates directional hints

- Frozen LLM: The main model that receives hints to guide generation

- RL Optimization: Uses reinforcement learning to optimize the policy LM

- Hint Generation: Creates specific stimuli to guide the target task

Advantages

- Controlled Generation: Provides specific guidance for desired outputs

- Efficient Training: Only the small policy LM needs to be trained

- Flexible Hints: Can generate various types of directional stimuli

- Black-Box Compatibility: Works with any frozen LLM

Applications

- Text Summarization: Guiding summary generation with specific focus areas

- Content Generation: Directing creative writing or content creation

- Task-Specific Guidance: Providing hints for specialized tasks

- Quality Control: Ensuring outputs meet specific criteria

Current Status

Full example coming soon!

Related Topics

- Chain-of-Thought Prompting - Understanding reasoning techniques

- Few-Shot Prompting - Learning from examples

- Prompt Engineering Guide - General prompt engineering techniques

References

- Li et al., (2023) - Directional Stimulus Prompting